What is Drone Photogrammetry?

- Dokimi GeoEngineering

- Sep 21, 2021

- 3 min read

At its most basic, “photogrammetry” is measuring real distances via photos. But not just a few photos: a lot of photos. More photos than you probably think are necessary. Think of your parents taking pictures of you and your date before you left for prom. You need every angle possible.

Or, at least, you need enough photos taken from the optimal height to get you the best ground sample distance (the real-world distance covered by a single pixel). In fact, each picture needs an 80% overlap with its neighbors. This is necessary for two reasons:

- For the computer to stitch images together to make the orthophoto (a 2D aerial image that’s been corrected for distortion).

- To capture enough angles to create a digital terrain model, or DTM (the shape of your surface).

When combined, the orthophoto and DTM create the 3D model of your site.
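To make the flight-planning arithmetic concrete, here is a minimal sketch of how ground sample distance and photo spacing relate to flying height and overlap. The camera numbers (sensor width, focal length, image width) and the 100 m altitude are hypothetical values chosen for illustration, not figures from a specific drone, and the function names are made up for this example.

```python
def ground_sample_distance(altitude_m, focal_length_mm, sensor_width_mm, image_width_px):
    """Ground distance covered by one pixel, in metres per pixel."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def photo_spacing(footprint_m, overlap):
    """Distance between consecutive photos that gives the requested overlap fraction."""
    return footprint_m * (1.0 - overlap)


# Hypothetical camera and flight values (assumptions for illustration only).
altitude_m = 100.0
focal_length_mm = 8.8
sensor_width_mm = 13.2
image_width_px = 5472

gsd = ground_sample_distance(altitude_m, focal_length_mm, sensor_width_mm, image_width_px)
footprint = gsd * image_width_px          # ground width covered by one photo
spacing = photo_spacing(footprint, 0.80)  # 80% overlap, as described above

print(f"GSD: {gsd * 100:.2f} cm/px")
print(f"Footprint: {footprint:.1f} m, photo every {spacing:.1f} m")
```

Flying lower shrinks the ground sample distance (finer detail) but also shrinks each photo's footprint, so you need more photos to keep the same overlap.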

The gist of the science: How does drone photogrammetry work?

If you see the same feature from three or more known positions, you can triangulate its location in space. In other words, you can capture its horizontal (x,y) and vertical (z) coordinates. A feature is any visually distinct point in an image.
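As a rough illustration of that triangulation idea, the sketch below finds the point closest to several viewing rays using a least-squares fit. It assumes you already know each camera position and the direction from that camera toward the feature; real photogrammetry software has to derive those directions from pixel coordinates and camera orientation, which is skipped here. The positions and the feature location are made-up test values.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for k in range(col, 4):
                M[r][k] -= f * M[col][k]
    x = [0.0] * 3
    for i in (2, 1, 0):
        x[i] = (M[i][3] - sum(M[i][j] * x[j] for j in range(i + 1, 3))) / M[i][i]
    return x


def triangulate(camera_positions, ray_directions):
    """Least-squares point closest to all rays (camera position + direction)."""
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for c, d in zip(camera_positions, ray_directions):
        d = normalize(d)
        for i in range(3):
            for j in range(3):
                # (I - d d^T) projects onto the plane perpendicular to the ray.
                p_ij = (1.0 if i == j else 0.0) - d[i] * d[j]
                A[i][j] += p_ij
                b[i] += p_ij * c[j]
    return solve3(A, b)


# Hypothetical setup: three drone positions at 100 m, all seeing one ground feature.
feature = [10.0, 20.0, 5.0]
cameras = [[0.0, 0.0, 100.0], [30.0, 0.0, 100.0], [0.0, 30.0, 100.0]]
rays = [[f - c for f, c in zip(feature, cam)] for cam in cameras]

estimate = triangulate(cameras, rays)
print([round(v, 3) for v in estimate])
```

With perfect rays the estimate lands on the feature itself. With noisy real-world measurements, extra views help average out the error, which is one reason more overlapping photos are useful.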

If you took a couple of typical overlapping images from your survey, you’d easily be able to pick out many “features” that appear in both. The more features you match, the better you can relate images to each other and reconstruct objects within them. This is exactly what photogrammetry software does for one feature, and the next, and the next, and so on, until it’s covered your entire site.

Once you have a lot of these features (think millions), you can create a “cloud” of points. Each point is a matched feature describing your surveyed area at that location. You can then turn your point cloud into any of the regular outputs used in geospatial software, like a 3D mesh.
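One simple way to picture the step from point cloud to a regular output is gridding: drop every point into a square cell and average the heights in each cell. The sketch below does only that. It is a simplified stand-in for what processing software does, not a description of any particular product's method, and the four sample points and the `grid_elevation` name are invented for illustration.

```python
from collections import defaultdict


def grid_elevation(points, cell_size):
    """Average the z value of all points that fall in each (col, row) grid cell."""
    sums = defaultdict(lambda: [0.0, 0])  # cell -> [sum of z, point count]
    for x, y, z in points:
        key = (int(x // cell_size), int(y // cell_size))
        sums[key][0] += z
        sums[key][1] += 1
    return {key: total / count for key, (total, count) in sums.items()}


# Hypothetical (x, y, z) points; a real cloud would hold millions.
points = [
    (0.2, 0.3, 10.0),
    (0.8, 0.6, 12.0),
    (1.5, 0.4, 20.0),
    (1.1, 1.9, 30.0),
]

dem = grid_elevation(points, cell_size=1.0)
for cell, height in sorted(dem.items()):
    print(cell, height)
```

Cells with no points simply do not appear in the result; real tools would typically interpolate across such gaps.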

You’re using photogrammetry right now!

The best way to visualize this is to use your eyes—literally. Your eyeballs are using photogrammetry all the time.

You have two eyes (two cameras), processing a live feed of your surroundings. Because your eyes are slightly apart, you’re getting two different inputs at slightly different angles. (Test this yourself by holding up a finger in front of your face. Look at it with one eye closed, then the other. You’ll notice your finger jumps relative to background objects.)

Your brain knows how far apart your eyes are. This allows it to process this info into a sense of distance by merging both feeds into a single perspective. (If you’ve ever tried to catch a ball with one eye closed, you know the lack of depth perception makes it difficult.)

Your mind is rendering a live depth map of the 3D world from two 2D inputs—just like how Propeller renders a 3D survey from many 2D photos.
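The two-eye version of this has a compact textbook form: for two parallel cameras, depth equals focal length times the distance between the cameras, divided by how far the feature shifts between the two images (the disparity). The sketch below applies that relation. The focal length in pixels and the 6.5 cm eye spacing are assumed example values, and the idealized parallel-camera model is a simplification of real vision.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth of a point seen by two parallel cameras, in metres."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


# Assumed example values: 1000 px focal length, eyes 6.5 cm apart.
focal_length_px = 1000.0
baseline_m = 0.065

near = depth_from_disparity(focal_length_px, baseline_m, disparity_px=130.0)
far = depth_from_disparity(focal_length_px, baseline_m, disparity_px=13.0)

print(f"Large jump (130 px): about {near:.2f} m away")
print(f"Small jump (13 px): about {far:.2f} m away")
```

This matches the finger test: the closer the object, the more it appears to jump between the two views.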

Drone photogrammetry, as used in surveying workflows, also processes geospatial information collected from the drone and ground control points. Doing so allows you to use your 3D survey to collect highly accurate real-world quantities (within 1/10 ft when done properly) with just a few clicks of your mouse.

The final picture: What do you get from a photogrammetric survey?

Once those steps are completed, you’ve got two things: a point cloud and an orthomosaic. The former is where all your “terrain” outputs (DXF mesh, GeoTIFFs, etc.) come from. The orthomosaic is then layered over the elevation model, which gives you that final measurable 3D model of your worksite.
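To show what "measurable" can mean in practice, here is a minimal sketch of one common quantity: the volume of material sitting above a flat base, computed from a small elevation grid. The grid values, cell size, and base elevation are hypothetical, and the result is in whatever cubic unit the grid uses. Real measurement tools generally handle sloped bases and irregular boundaries, which this sketch does not.

```python
def volume_above_base(elevations, cell_size, base_elevation):
    """Sum (height above base) x (cell area) over every cell in the grid."""
    cell_area = cell_size * cell_size
    return sum(
        max(z - base_elevation, 0.0) * cell_area
        for row in elevations
        for z in row
    )


# Hypothetical 3x3 elevation grid of a small stockpile, 2-unit cells.
stockpile = [
    [100.0, 100.5, 100.0],
    [100.5, 102.0, 100.5],
    [100.0, 100.5, 100.0],
]

volume = volume_above_base(stockpile, cell_size=2.0, base_elevation=100.0)
print(f"Estimated volume: {volume:.1f} cubic units")
```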

Once you have those models, what you do with them is up to you. You can use industry-specific measurement tools to analyze your site or export the data for use in other GIS or CAD software. However you plan to use your drone data, photogrammetry will get you the information you need faster, and with better accuracy, than traditional surveying methods alone.

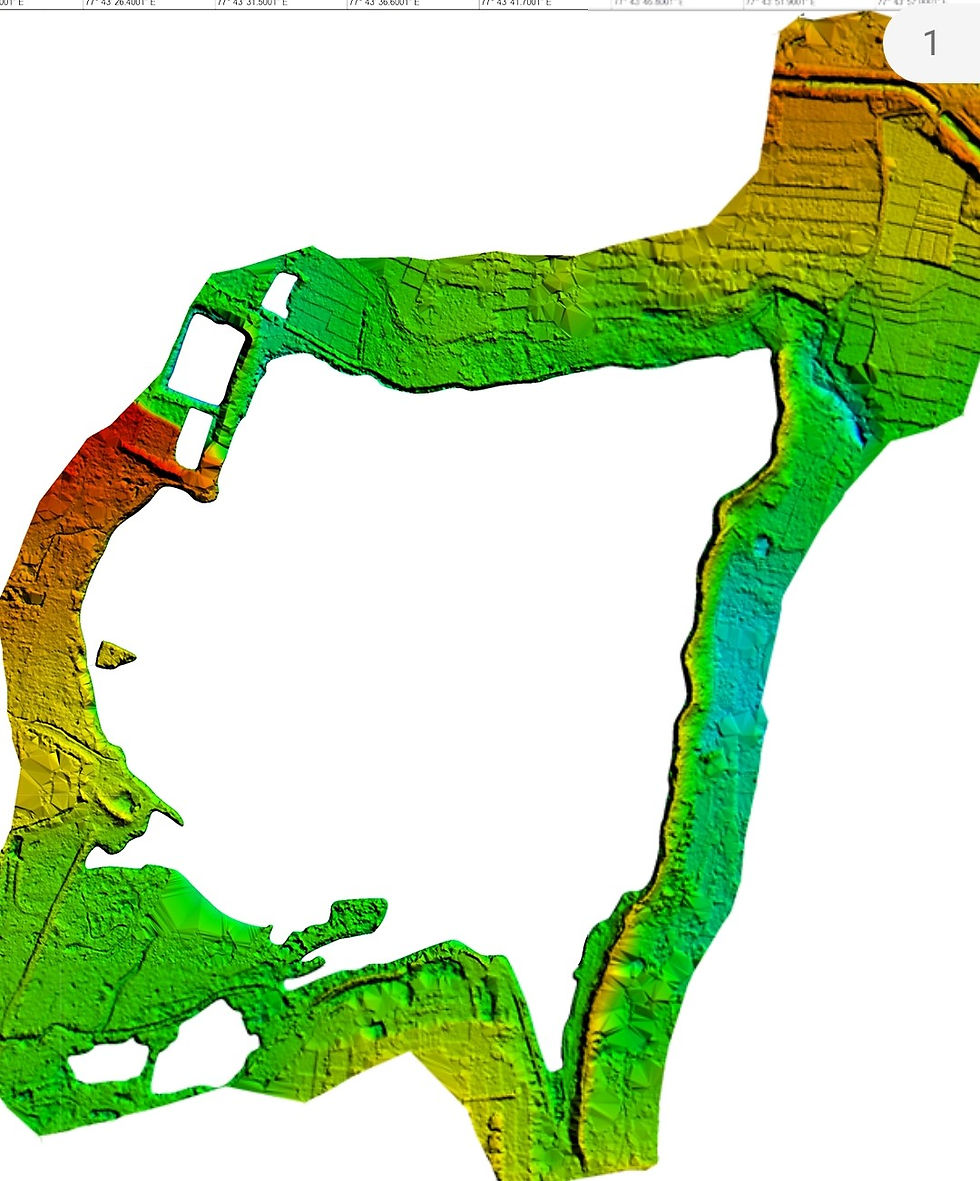

Some samples are attached below:
